Shifting the Supply and Demand for International Large Scale Assessments

This NORRAG Highlights is contributed by Ji Liu, Professor of Comparative Education and Economics of Education at Shaanxi Normal University and Senior Research Associate at NORRAG, and Gita Steiner-Khamsi, Professor of Education at Teachers College of Columbia University, Professor in Interdisciplinary Programmes at the Graduate Institute, and Director of NORRAG. They discuss their new research article, in which they scrutinize the formula the World Bank uses to calculate the gap between expected years of schooling and learning-adjusted years of schooling, a formula that relies on standardized testing in schools. They show why it is problematic to penalize countries that have chosen not to participate in large-scale student assessments.
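For readers unfamiliar with the index: in the World Bank's published HCI methodology, learning-adjusted years of schooling (LAYS) are obtained by scaling expected years of school (EYS) by the harmonized test score (HTS) relative to a benchmark of 625 points, the TIMSS advanced international benchmark. The gap discussed here can therefore be written as follows (the notation is ours):

$$
\text{LAYS} = \text{EYS} \times \frac{\text{HTS}}{625},
\qquad
\text{EYS} - \text{LAYS} = \text{EYS}\left(1 - \frac{\text{HTS}}{625}\right)
$$

Because HTS enters multiplicatively, any error introduced when harmonizing test scores carries straight through to learning-adjusted years.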

Around the world today, more countries than ever before are having their students take part in International Large Scale Assessments (ILSAs). More than 600,000 15-year-olds in over 75 economies took part in the most recent round of the Programme for International Student Assessment (PISA 2018), while over 60 countries and territories took part in the Trends in International Mathematics and Science Study (TIMSS 2019). This spectacular growth in ILSA participation is not only the result of niche marketing around national competitiveness and skills, but also a reflection of growing anxiety within government technocracies and the glittering appeal of rankings. On the supply side, the OECD, the IEA, and the global testing industry have created discursive incentives that ensure mass participation. On the demand side, globalization and political pressure to ‘fit in’ keep countries on the edge of their seats on every PISA release day.

However, in our new research article, we show that this equilibrium is about to shift.

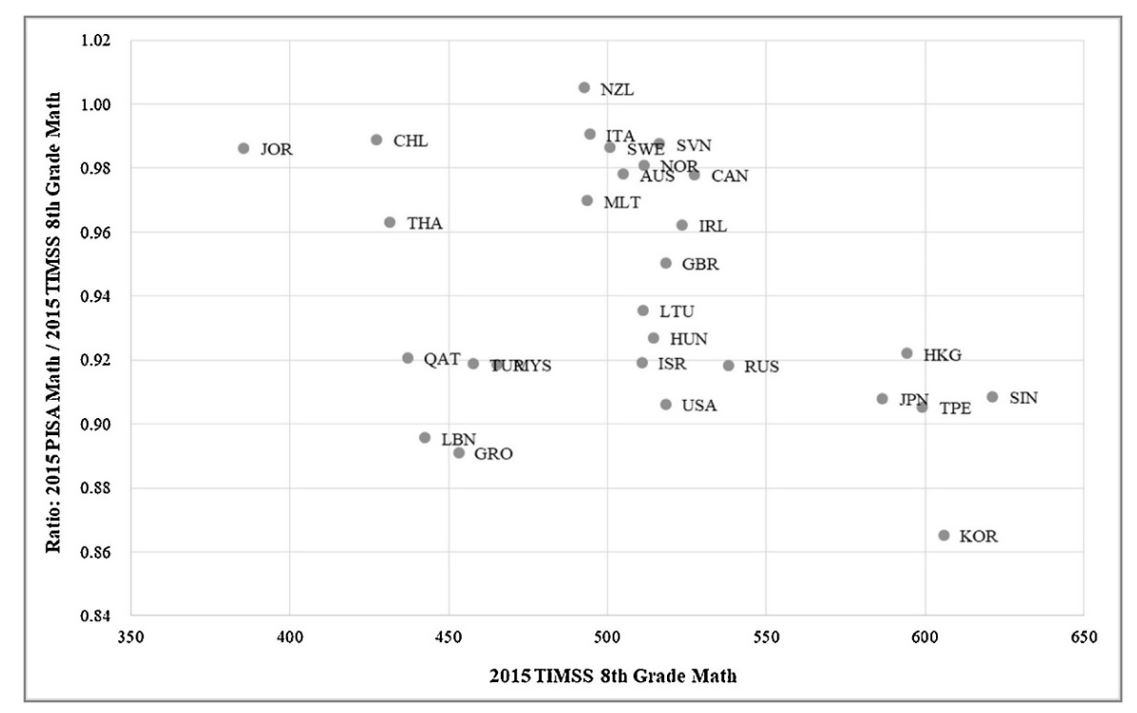

A third party on the ILSA supply-and-demand chart, the World Bank, has recently placed a large bet on ILSAs as the answer to the global #learningcrisis. In late 2018, the Bank released its Human Capital Index (HCI), which aims to make #learningpoverty visible and to reduce it. The HCI is intended to provide a “direct measure of school quality and human capital” and to act as a barometer for the Bank’s many carrot-and-stick lending programs. Technically speaking, the Bank harmonizes scores from different ILSAs and regional assessments onto a single scale by computing a ratio-linking ‘learning exchange rate’ from test-overlap systems. Put more simply, the HCI relies on systems that took part in several ILSAs or regional assessments to convert the scores of systems that did not participate fully, or that chose not to participate at all. In our study, we show empirically that this ‘learning exchange rate’ fluctuates greatly and can shift the imputed score of “a typical 500-point” system by as much as 70 points, depending on which systems are treated as test-overlapped (see Figure 1). Such large, unwanted variation is a serious concern, especially given the multiplier effect built into any extrapolation exercise.
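To make the mechanics concrete, here is a minimal sketch of ratio linking, assuming the exchange rate is simply the ratio of the mean anchor-test score to the mean source-test score across the chosen overlap systems. The systems `A`, `B`, `C`, all scores, and the function names are hypothetical and illustrative; this is not the Bank's code. It shows how the same 500-point source score maps to different imputed values depending on which systems count as overlapping.

```python
from statistics import mean

# Hypothetical overlap data: system -> (anchor-test score, source-test score).
# Illustrative numbers only, not actual PISA/TIMSS results.
OVERLAP = {
    "A": (520.0, 500.0),
    "B": (480.0, 470.0),
    "C": (450.0, 400.0),
}


def learning_exchange_rate(overlap, systems):
    """Ratio of mean anchor score to mean source score over the chosen systems."""
    anchor_mean = mean(overlap[s][0] for s in systems)
    source_mean = mean(overlap[s][1] for s in systems)
    return anchor_mean / source_mean


def impute_anchor_score(source_score, rate):
    """Convert a source-test score onto the anchor scale with a ratio link."""
    return source_score * rate


if __name__ == "__main__":
    # Same 500-point source score, three different choices of overlap set.
    for systems in (["A", "B", "C"], ["A", "B"], ["B", "C"]):
        rate = learning_exchange_rate(OVERLAP, systems)
        imputed = impute_anchor_score(500.0, rate)
        print(f"overlap={systems}: rate={rate:.4f}, 500 -> {imputed:.1f}")
```

With these made-up numbers, the imputed value ranges from about 515 to about 534 depending only on the overlap set; the authors report swings of up to 70 points with real data.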

Figure 1. ‘Learning Exchange Rate’ among Test-Overlap Systems, PISA and TIMSS 2015.

Source: Liu & Steiner-Khamsi, 2020

There are many explanations for this volatility, any of which should raise eyebrows about the Bank’s harmonization. In our study, we summarize the extensive literature on the systemic issue of ILSA (in)comparability.

- First and foremost, ILSAs and regional assessments are run by different testing agencies and vary in objective, domain, and design. As a case in point, there exist two distinct classes of test programs: one that primarily evaluates skills, literacy, and competencies (e.g. PISA, PIRLS), and another that assesses learning outcomes more closely aligned with curriculum input (e.g. TIMSS, SACMEQ). When differences in objective, domain, and design compound one another, they introduce substantial uncertainty into any inference drawn across tests.

- Secondly, participating countries, target populations, and sampling approaches vary drastically by test program. For instance, PISA mainly covers a large cluster of the world’s most prominent economies (OECD members and invited OECD partner systems), whereas TIMSS and PIRLS participants include many developing countries, and regional tests such as LLECE, SACMEQ, and EGRA are geographically restricted.

- Thirdly, the salience of school quality relative to non-school factors varies considerably across systems. A large body of studies indicates that ILSA score differences result from a combination of family, cultural, and measurement factors that are arguably independent of school quality. For instance, children’s developmental conditions at home, especially family educational expectations and private education spending, vary substantially across systems.

- Fourthly, test programs differ considerably in measurement technology and technical standards. In particular, results differ substantially between paper-and-pencil and computer-administered tests. The large majority of PISA 2018 students took computer-administered tests, whereas TIMSS only began a gradual transition to computer-based testing in 2019.

On the empirical side, we examine this (in)comparability further by cross-checking test sample characteristics. On the one hand, test-overlap systems differ drastically from systems that are more selective about test participation. For instance, Botswana, the only test-overlap country in SACMEQ 2013 and TIMSS 2011, is twice as wealthy and provides one additional year of formal primary education compared with ‘SACMEQ Only’ and ‘TIMSS Only’ systems. On the other hand, we show that even within the same education system and in the same test year, different ILSAs draw drastically different test samples. For systems that took part in both PISA 2015 and TIMSS 2015, target population coverage differs by 11.3 percentage points on average, and mean sample age differs by 1.6 years (see Table 3).
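Assuming the averages above are means of absolute differences taken across overlap systems, the calculation can be sketched as follows. The `SampleStats` class, the `mean_abs_gap` helper, and every number are hypothetical and chosen for illustration; they are not taken from the study.

```python
from dataclasses import dataclass


@dataclass
class SampleStats:
    """Sample characteristics for one system in one assessment (hypothetical)."""
    coverage_pct: float  # share of the target population covered, in percent
    mean_age: float      # mean age of sampled students, in years


def mean_abs_gap(pairs, field):
    """Average absolute difference in `field` between two assessments' samples."""
    gaps = [abs(getattr(a, field) - getattr(b, field)) for a, b in pairs]
    return sum(gaps) / len(gaps)


# Hypothetical (PISA-like, TIMSS-like) pairs for the same systems and year.
PAIRS = [
    (SampleStats(80.0, 15.8), SampleStats(95.0, 14.3)),
    (SampleStats(90.0, 15.7), SampleStats(85.0, 14.0)),
]

if __name__ == "__main__":
    print(f"coverage gap: {mean_abs_gap(PAIRS, 'coverage_pct'):.1f} pp")
    print(f"age gap: {mean_abs_gap(PAIRS, 'mean_age'):.2f} years")
```

Taking absolute values matters here: signed differences in opposite directions would cancel out and understate how far apart the two samples are.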

As a byproduct of the Bank’s crude methodology for harmonizing ILSA scores, this stylized approach generates new penalties that may coerce partial participants and non-participants into rethinking their decisions. Importantly, our results indicate that test participation type alone accounts for about 58 percent of the variation in Bank-harmonized scores, and that the score penalty associated with partial ILSA participation equates to at least one full year of learning (see Table 4). More troubling still, most partial and non-participants in ILSAs are low- and lower-middle-income countries. This Bank-imposed, participation-contingent score penalty will inevitably shape decisions to participate in one test rather than another, or not to participate at all, and these effects will only be exacerbated if the Bank uses this information as an instrument to assess new lending programs or reevaluate current ones.
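One common way to express how much of the score variation a grouping “accounts for” is the between-group share of the total sum of squares, which equals the R-squared of a regression of scores on participation-type indicators. The sketch below assumes that reading of the 58 percent figure; the group labels and scores are hypothetical, and the function name is illustrative.

```python
from statistics import mean


def variance_share_explained(scores_by_group):
    """Between-group sum of squares divided by total sum of squares (eta squared).

    Equivalent to the R-squared of an OLS regression of scores on an intercept
    plus indicator variables for each group.
    """
    all_scores = [s for group in scores_by_group.values() for s in group]
    grand_mean = mean(all_scores)
    total_ss = sum((s - grand_mean) ** 2 for s in all_scores)
    between_ss = sum(
        len(group) * (mean(group) - grand_mean) ** 2
        for group in scores_by_group.values()
    )
    return between_ss / total_ss


# Hypothetical harmonized scores grouped by ILSA participation type.
SCORES = {
    "full": [500.0, 520.0],
    "partial": [400.0, 420.0],
    "none": [390.0, 410.0],
}

if __name__ == "__main__":
    print(f"share explained by participation type: {variance_share_explained(SCORES):.3f}")
```

These toy groups are deliberately well separated, so the share is far higher than the roughly 58 percent reported for the real data.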

To our knowledge, our new study is the first of its kind to draw attention to the problematic nature of the Bank’s HCI algorithm and to highlight its troubling consequences for shifting country-ILSA dynamics. The main message from our findings is clear: improving learning is critical, but the World Bank’s flawed harmonization of ILSA scores in effect penalizes governments that have chosen alternative, non-standardized pathways for measuring learning. In an era of global monitoring of education development, these “methodological glitches” have far-reaching political, social, and economic consequences that need to be brought under scrutiny.

About the Authors: Ji Liu is Professor of Comparative Education and Economics of Education at Shaanxi Normal University, and Senior Research Associate at NORRAG. Gita Steiner-Khamsi is Professor of Education at Teachers College of Columbia University, Professor in Interdisciplinary Programmes at the Graduate Institute, and Director of NORRAG.


Disclaimer: NORRAG’s blog offers a space for dialogue about issues, research and opinion on education and development. The views and factual claims made in NORRAG posts are the responsibility of their authors and are not necessarily representative of NORRAG’s opinion, policy or activities.